GIOS Lecture Notes - Part 2 Lesson 1 - Process and Process Management

What is a Process

- Simple definition - an instance of an executing program. Sometimes called a task or a job.

- OS manages hardware on behalf of applications

- Application == program on disk, flash memory, etc. A static entity.

- Process == state of a program while it is executing, loaded in memory. An active entity.

- Execution state of an application

- Same application can be run multiple times, each as its own process
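
To make the program-versus-process distinction concrete, here is a minimal sketch that launches the same program twice and collects the process ID each instance reports. The one-line script and the helper name `run_two_instances` are illustrative assumptions, not part of the lecture.

```python
import subprocess
import sys

def run_two_instances():
    """Start the same program twice; return the PID reported by each instance."""
    # The "application" is a tiny script (assumed for this demo) that prints its
    # PID and then blocks on stdin, so both instances are alive at the same time.
    script = "import os, sys; print(os.getpid(), flush=True); sys.stdin.read()"
    procs = [
        subprocess.Popen(
            [sys.executable, "-c", script],
            stdin=subprocess.PIPE,
            stdout=subprocess.PIPE,
            text=True,
        )
        for _ in range(2)
    ]
    pids = [int(p.stdout.readline()) for p in procs]
    for p in procs:
        p.communicate()  # closes stdin so the instance exits, then waits for it
    return pids

if __name__ == "__main__":
    print(run_two_instances())
```

Both instances run the same static program, yet the OS tracks them as two separate processes with distinct PIDs.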

What Does a Process Look Like

- A process encapsulates all of the state of a running application

- Address space is an OS abstraction to capture all of this state.

- Types of state:

- text and data

- static state when process first loads

- heap

- dynamically created during execution

- stack

- grows and shrinks

- LIFO (last in, first out) structure

Process Address Space

- Address space == ‘in memory’ representation of a process

- V0 to Vmax represent the range of virtual addresses used by process

- Called virtual because it does not necessarily need to correspond to physical memory locations

- Physical addresses are locations in physical memory (DRAM)

- Hardware and OS maintain a mapping from virtual addresses to physical addresses

- Page tables == mapping of virtual to physical addresses
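
The virtual-to-physical mapping can be sketched with a toy page table. This is a simplified model, not how any particular OS or MMU implements it: the 4096-byte page size, the dictionary representation, and the `translate` helper are all assumptions made for illustration.

```python
PAGE_SIZE = 4096  # assumed page size in bytes

def translate(page_table, vaddr):
    """Translate a virtual address to a physical address using a toy page table.

    page_table maps virtual page number -> physical frame number.
    """
    vpn, offset = divmod(vaddr, PAGE_SIZE)  # split into page number and offset
    if vpn not in page_table:
        raise LookupError(f"no mapping for virtual page {vpn}")
    return page_table[vpn] * PAGE_SIZE + offset

if __name__ == "__main__":
    # Hypothetical mapping: virtual page 0 -> frame 7, virtual page 1 -> frame 3.
    table = {0: 7, 1: 3}
    print(translate(table, 4100))  # virtual page 1, offset 4
```

Note that adjacent virtual pages (0 and 1) land in unrelated physical frames (7 and 3), which is why the addresses are called virtual.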

Address Space and Memory Management

- Not all processes require the entire address space from V0 to Vmax

- Parts of virtual address space may not be allocated

- May not be enough physical memory for all state

- OS dynamically decides which portion of which address space will be stored in physical memory, and where.

- Multiple processes may share physical memory

- All such processes may have portions of their state swapped out to disk, to be brought back in if and when needed

- OS may also perform memory access validity checks to ensure that a process has permission to access the memory it is trying to reach
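
The decisions listed above (which pages are resident, which are swapped out, and whether an access is valid) can be sketched as a toy memory manager. Everything here is an assumption for illustration: the class name `ToyMemoryManager`, the first-in-first-out eviction choice, and the use of `PermissionError` for an invalid access. Real operating systems use far more sophisticated policies.

```python
class ToyMemoryManager:
    """Tracks which (pid, virtual page) pairs are in physical memory or on disk."""

    def __init__(self, num_frames):
        self.free_frames = list(range(num_frames))
        self.allocated = set()  # pages the OS has granted to a process
        self.resident = {}      # (pid, vpn) -> frame, kept in load order
        self.swapped = set()    # pages currently swapped out to disk

    def allocate(self, pid, vpn):
        self.allocated.add((pid, vpn))

    def access(self, pid, vpn):
        page = (pid, vpn)
        if page not in self.allocated:
            # Validity check: the process never had this page allocated.
            raise PermissionError(f"process {pid} may not access page {vpn}")
        if page in self.resident:
            return "hit"
        if not self.free_frames:
            # No free physical memory: swap out the oldest resident page (FIFO).
            victim = next(iter(self.resident))
            self.free_frames.append(self.resident.pop(victim))
            self.swapped.add(victim)
        self.resident[page] = self.free_frames.pop()
        self.swapped.discard(page)
        return "page fault"

if __name__ == "__main__":
    mm = ToyMemoryManager(num_frames=2)
    for page in [(1, 0), (1, 1), (2, 0)]:
        mm.allocate(*page)
    print([mm.access(1, 0), mm.access(1, 1), mm.access(2, 0)])
```

With only two frames shared by two processes, the third access forces one of process 1's pages out to disk, where it stays until it is touched again.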

Process Execution State

- How does the OS know what a process is doing?

- Before an application can execute, its source code must be compiled into a binary

- OS must know where a process is in its sequence of binary instructions. This is tracked with a program counter (PC)

- Kept in a CPU register

- Other registers keep other information about other pieces of state

- Also need a stack pointer, which keeps track of where the top of the stack is

- OS maintains a process control block (PCB) to track all of these pieces of state.

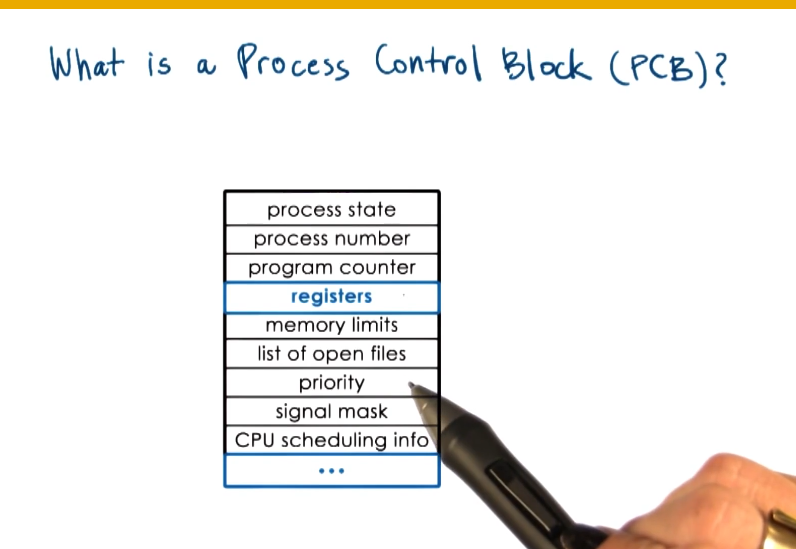

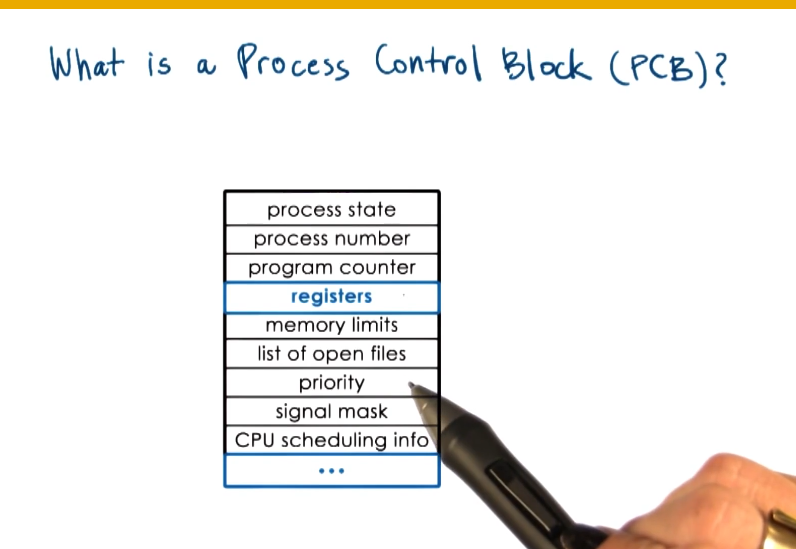

What is a Process Control Block

- A PCB is maintained for every process being managed by OS

- PCB is created and initialized when process is created

- Certain fields are updated as relevant pieces of state change

- Other fields change too frequently for that, so the CPU keeps dedicated registers for them, such as the PC register.

- OS must collect this state and save it into the PCB whenever the process stops running on the CPU

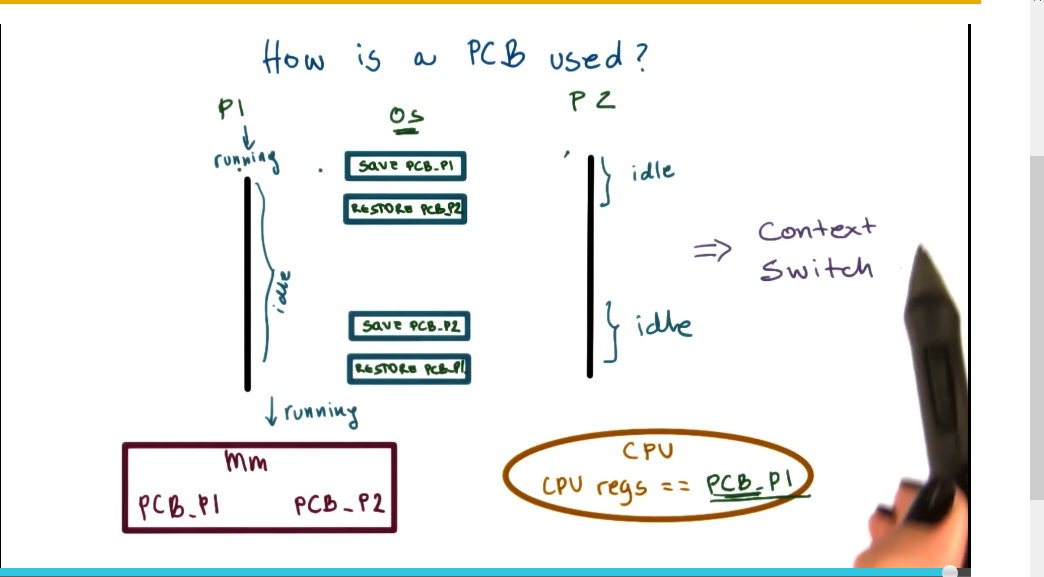

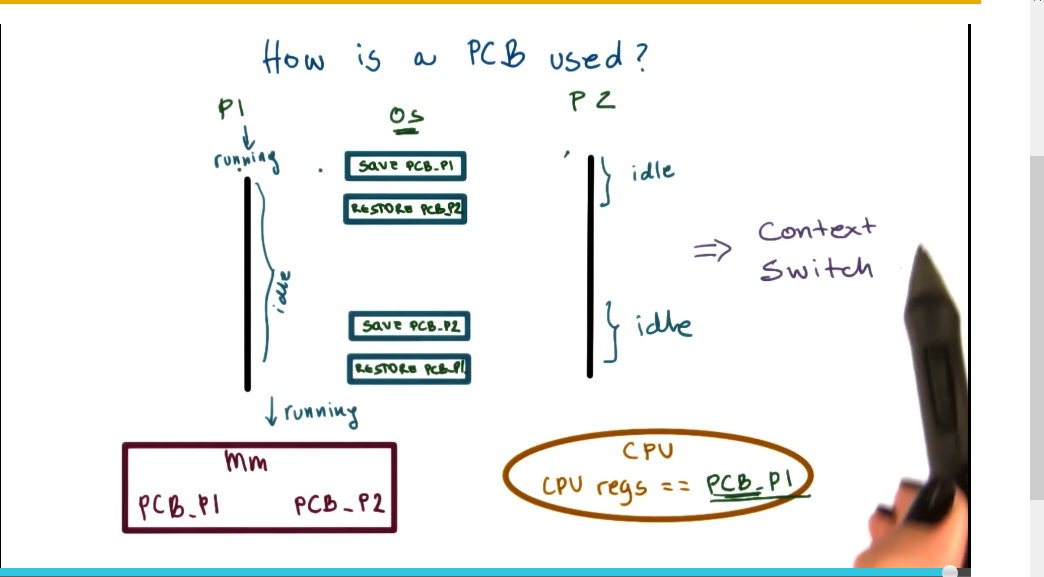

How is a PCB Used?

- Whenever there is a context switch between processes, state is saved from and loaded to the PCB
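
As a rough illustration of how the PCB is used, the sketch below models a PCB and a CPU as small data classes and saves and restores state between them. The field names and the `context_switch` helper are assumptions; a real PCB holds many more fields (memory mappings, open files, scheduling information, and so on).

```python
from dataclasses import dataclass, field

@dataclass
class PCB:
    pid: int
    state: str = "ready"
    program_counter: int = 0
    stack_pointer: int = 0
    registers: dict = field(default_factory=dict)

@dataclass
class CPU:
    program_counter: int = 0
    stack_pointer: int = 0
    registers: dict = field(default_factory=dict)

def context_switch(cpu, old, new):
    """Save the CPU state into old's PCB, then load new's PCB onto the CPU."""
    # Save: the outgoing process's fast-changing state lives in CPU registers.
    old.program_counter = cpu.program_counter
    old.stack_pointer = cpu.stack_pointer
    old.registers = dict(cpu.registers)
    old.state = "ready"
    # Restore: the incoming process resumes exactly where it left off.
    cpu.program_counter = new.program_counter
    cpu.stack_pointer = new.stack_pointer
    cpu.registers = dict(new.registers)
    new.state = "running"

if __name__ == "__main__":
    p1 = PCB(pid=1, state="running")
    p2 = PCB(pid=2, program_counter=500, stack_pointer=9000)
    cpu = CPU(program_counter=120, stack_pointer=8000, registers={"r0": 42})
    context_switch(cpu, p1, p2)
    print(p1, cpu, sep="\n")
```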

What is a Context Switch

- Context switch == switching the CPU from the context of one process to the context of another

- Expensive

- Direct costs: number of cycles for load and store instructions

- Indirect costs: cold cache and cache misses. The new process's data must be fetched from lower levels of the cache hierarchy, or even from main memory.

- As a result we want to limit frequency of context switching!

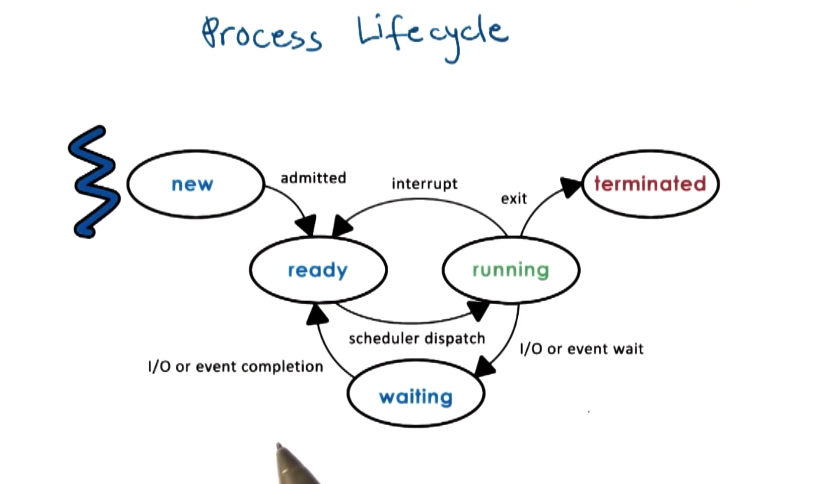

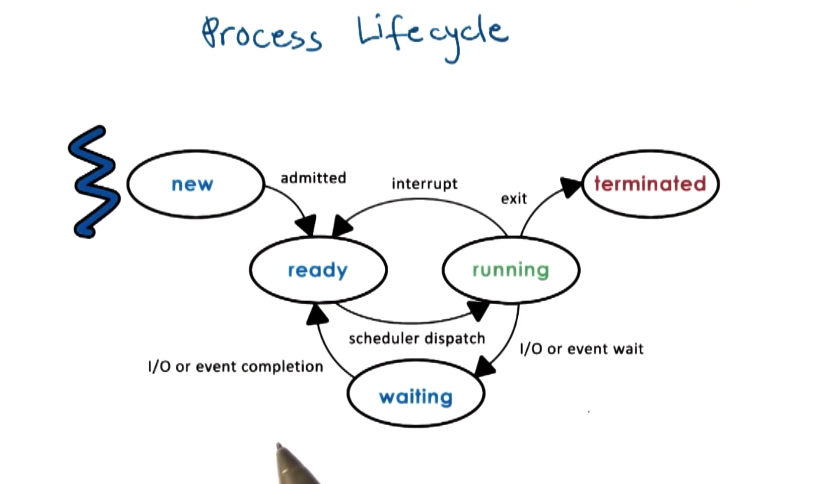

Process Lifecycle

- Processes can be in any of a number of states

- Process Creation

- Processes can create new processes, and all processes are forked off from the same original parent: init (PID 1) on Unix, Zygote for Android apps

- Some processes will be privileged, boot processes. When a user logs in, a user shell process is created, and new processes are spawned from that shell.

- Two main mechanisms for process creation

- Fork

- copies the parent PCB into new child PCB

- child continues execution at the instruction after fork: the copied PCB holds the same program counter, so parent and child both proceed from the same spot

- Exec

- replaces the child's image with a new program

- loads the new program and starts from its first instruction (the program counter is reset to the new program's entry point)

- Often used in combination: create a new child process with fork, then use exec to run a program completely different from the parent's
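
The fork-then-exec combination can be sketched with Python's `os` module, which wraps the Unix system calls directly. This assumes a Unix-like system; the helper name `spawn` and the exit code 127 used when exec fails are illustrative choices.

```python
import os
import sys

def spawn(argv):
    """Fork a child, exec argv in it, and return the child's exit code."""
    pid = os.fork()
    if pid == 0:
        # Child: starts as a copy of the parent, resuming right after fork().
        try:
            os.execvp(argv[0], argv)  # replaces the child's image; never returns
        finally:
            os._exit(127)             # reached only if exec itself failed
    # Parent: fork() returned the child's PID, so wait for that child.
    _, status = os.waitpid(pid, 0)
    return os.WEXITSTATUS(status) if os.WIFEXITED(status) else -1

if __name__ == "__main__":
    # Run a different program (here, a fresh interpreter) in the child.
    print(spawn([sys.executable, "-c", "import sys; sys.exit(7)"]))
```

The parent and child share the same code up to the `fork()` call; the return value (0 in the child, the child's PID in the parent) is what lets them take different paths.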

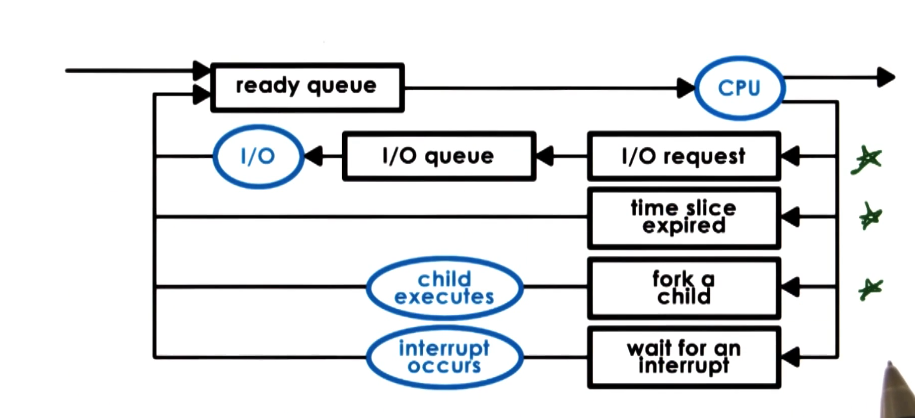

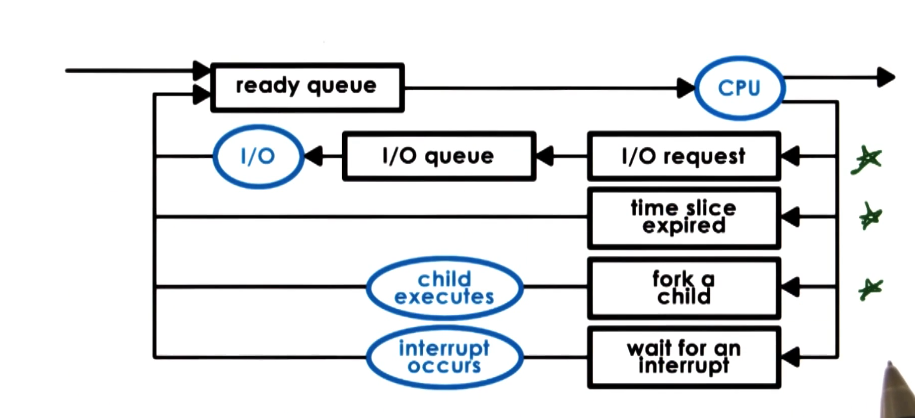

Role of the CPU Scheduler

- For the CPU to start executing a process, the process must be ready

- However, there will often be multiple processes in the ready queue. Need to pick the right one to give to the CPU next

- A CPU Scheduler determines which one of the currently ready processes will be dispatched to the CPU to start running, and how long it should run for.

- OS must:

- preempt == interrupt currently executing process and save its context

- schedule == run scheduler to choose next process

- dispatch == dispatch process onto CPU and switch into its context

- BE EFFICIENT ABOUT ALL THIS
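
The preempt, schedule, and dispatch steps can be sketched as a simple simulation. Round-robin is used here only because it is easy to follow; it is an assumed policy, not the one the lecture prescribes, and `round_robin` and its arguments are hypothetical names.

```python
from collections import deque

def round_robin(burst_times, timeslice):
    """Simulate round-robin scheduling.

    burst_times maps process name -> total CPU time needed.
    Returns the timeline as a list of (name, time_run) tuples.
    """
    ready = deque(burst_times.items())  # ready queue of (name, remaining time)
    timeline = []
    while ready:
        name, remaining = ready.popleft()  # schedule: pick the head of the queue
        ran = min(timeslice, remaining)    # dispatch: run for at most one timeslice
        timeline.append((name, ran))
        if remaining > ran:
            # preempt: the process is not finished, so it rejoins the ready queue
            ready.append((name, remaining - ran))
    return timeline

if __name__ == "__main__":
    print(round_robin({"A": 3, "B": 5}, timeslice=2))
```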

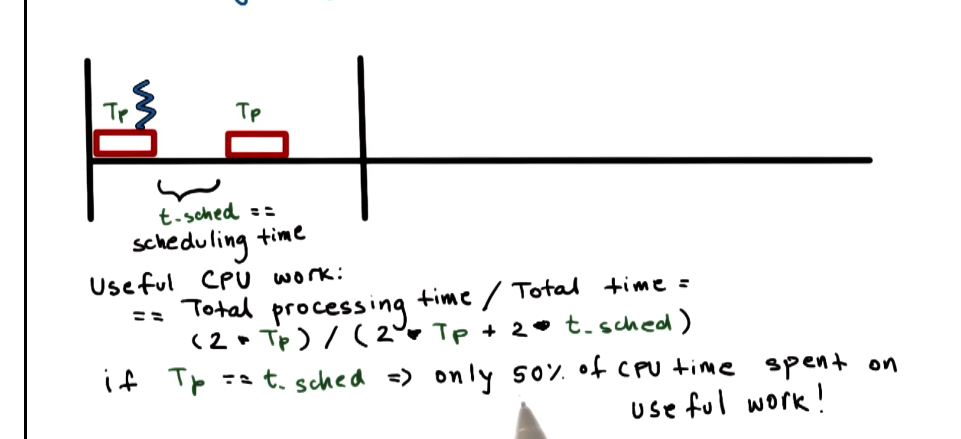

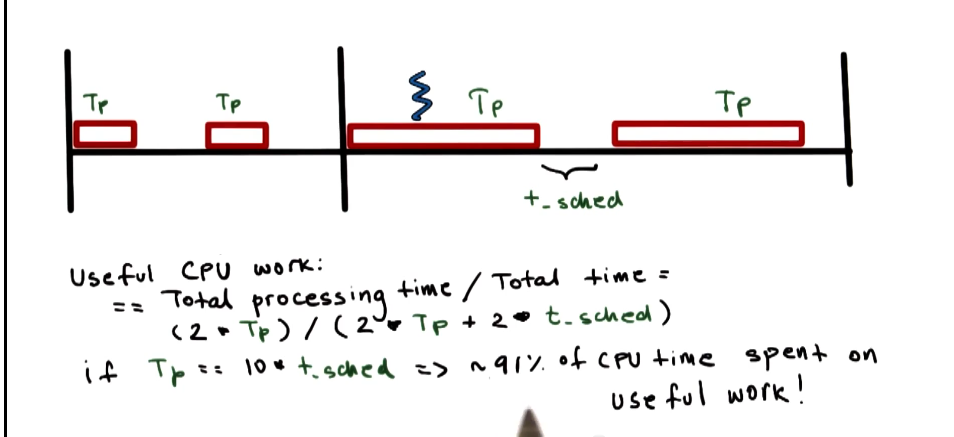

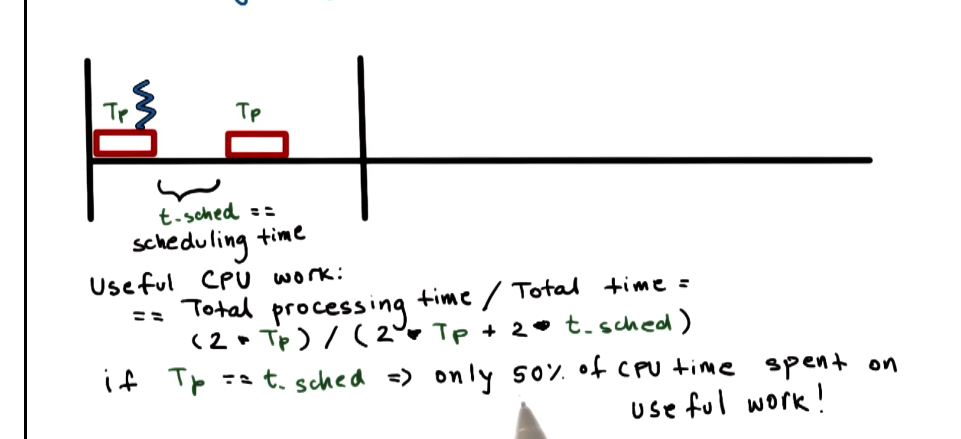

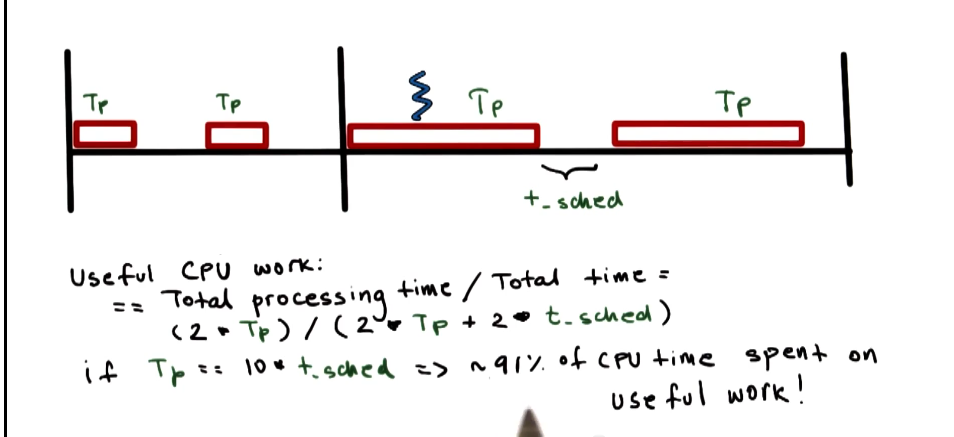

Length of Process

- How long should a process run for?

- How frequently should we run the scheduler?

- The more often we run it, the more time is spent on scheduling overhead instead of useful work

- timeslice == time Tp allocated to a process on the CPU

- Scheduling Design Decisions

- What are appropriate timeslice values?

- Metrics to choose next process to run?
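
The trade-off between timeslice length and scheduling overhead can be estimated with a small calculation. The model is an assumption: every timeslice of length Tp is followed by one scheduler run costing `t_sched` (a name introduced here for illustration), so the useful fraction of CPU time is Tp / (Tp + t_sched).

```python
def useful_fraction(timeslice, t_sched):
    """Fraction of CPU time spent on process work rather than scheduling.

    Assumes each timeslice is followed by exactly one scheduler run.
    """
    return timeslice / (timeslice + t_sched)

if __name__ == "__main__":
    print(useful_fraction(1, 1))   # timeslice equal to the scheduling cost
    print(useful_fraction(10, 1))  # timeslice ten times the scheduling cost
```

Under this model, a timeslice equal to the scheduling cost wastes half the CPU, while a timeslice ten times longer keeps roughly 91% of it for useful work.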

What about I/O?

Inter Process Communication

- Can processes interact? Yes! The OS must provide mechanisms for this

- These days more and more applications are actually multiple processes

- e.g. web server front end and database backend

- OS goes to great lengths to protect and isolate processes from each other. IPC has to be built around these protections.

- Inter-Process Communication (IPC) Mechanisms:

- Transfer data/info between address spaces

- Maintain protection and isolation

- Provide flexibility and performance

- Message-passing IPC:

- OS provides a communication channel, such as a shared buffer

- Processes write/read messages to/from channel

- Pros

- OS manages the channel and provides APIs

- Cons

- Overhead. Lots of extra reads/writes for communication
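
A pipe is one OS-managed channel that fits the message-passing description above. The sketch below assumes a Unix-like system and uses a hypothetical helper, `send_via_pipe`, in which a forked child writes a message and the parent reads it.

```python
import os

def send_via_pipe(message):
    """Fork a child that writes message into a pipe; the parent reads it back."""
    read_fd, write_fd = os.pipe()  # the OS creates and manages the channel
    pid = os.fork()
    if pid == 0:
        # Child: the sender. Every write is a system call into the OS.
        try:
            os.close(read_fd)
            os.write(write_fd, message)  # fine for short messages in this demo
            os.close(write_fd)
        finally:
            os._exit(0)
    # Parent: the receiver. Close the unused write end so EOF can be detected.
    os.close(write_fd)
    chunks = []
    while True:
        chunk = os.read(read_fd, 4096)
        if not chunk:  # empty read means the writer closed its end
            break
        chunks.append(chunk)
    os.close(read_fd)
    os.waitpid(pid, 0)
    return b"".join(chunks)

if __name__ == "__main__":
    print(send_via_pipe(b"hello from child"))
```

Each message is copied from the sender into the kernel and then from the kernel to the receiver, which is the overhead noted in the cons.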

- Shared memory IPC:

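- OS establishes a shared channel and maps it into each process's address space

- Processes directly read from and write to this memory

- Pros

- OS is out of the way once the mapping is established, so reads and writes incur no per-message OS overhead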

- Cons

- No OS-provided API: developers must (re)implement the code for safe, synchronized reading and writing of shared memory
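
Shared-memory IPC can be sketched with an anonymous memory mapping that is inherited across `fork`. This assumes a Unix-like system, where `mmap.mmap(-1, n)` creates a shared anonymous mapping by default; the helper name `share_value` and the use of `waitpid` as the only synchronization are simplifications for the demo.

```python
import mmap
import os
import struct

def share_value(value):
    """Have a forked child write an integer into shared memory; parent reads it."""
    shared = mmap.mmap(-1, 8)  # 8 bytes mapped into both address spaces after fork
    pid = os.fork()
    if pid == 0:
        # Child: writes straight into memory, with no system call per write.
        try:
            struct.pack_into("q", shared, 0, value)
        finally:
            os._exit(0)
    # Parent: synchronization is the application's job. Here we simply wait for
    # the child to exit before reading.
    os.waitpid(pid, 0)
    (result,) = struct.unpack_from("q", shared, 0)
    shared.close()
    return result

if __name__ == "__main__":
    print(share_value(42))
```

Without the `waitpid` call the parent could read before the child writes, which is exactly the kind of coordination the OS no longer handles for you in this model.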