GIOS Lecture Notes - Part 3 Lesson 3 - Inter-Process Communication

Provided Resources

Inter-Process Communication

- IPC == OS-supported mechanisms for interaction among processes (coordination and communication)

- message passing IPC

- e.g. sockets, pipes, message queues

- memory based IPC

- e.g. shared memory, mmapped files

- higher-level semantics

- mechanisms that support more than a simple channel for two processes to coordinate or communicate with each other

- instead these methods prescribe some additional detail on protocols, formatting, exchange, etc

- e.g. files, RPC

- cover this more later

- synchronization primitives

- communication implies synchronization, and vice versa

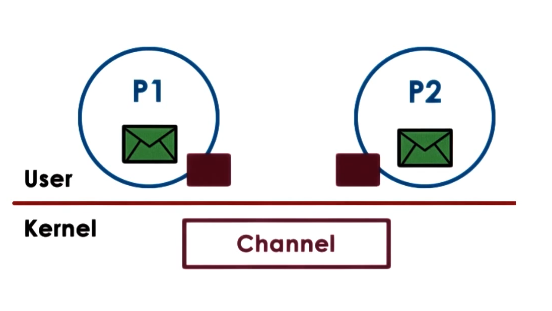

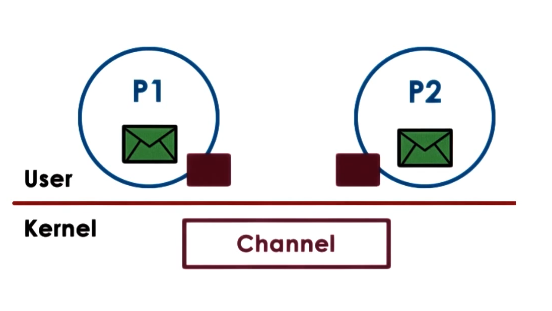

Message Based IPC

- Message passing

- send/recv of messages

- OS creates and maintains a channel

- OS provides interface to processes - a port

- processes can send/write and recv/read messages from a port

- kernel required to

- establish communication

- perform each IPC operation

- send: system call + data copy

- recv: system call + data copy

- request-response requires:

- 4x user/kernel crossings

- 4 data copies

- Cons

- overheads: every message pays for the user/kernel crossings and data copies listed above

- Pros

- simplicity: kernel does channel management and synchronization

- Pipes

- carry byte stream between two processes

- e.g. connect output from one process to input of another process

- Message Queues

- carry “messages” among processes

- OS management includes priorities, scheduling of msg delivery

- APIs: SysV and POSIX

- Sockets

- OS abstraction of ports

- send(), recv() == pass message buffers

- socket() == create kernel-level socket buffer

- associate necessary kernel-level processing (TCP/IP, etc.)

- can be used between different machines, in which case the socket is a channel between the process and the network device

- if on the same machine, bypass full protocol stack
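The request-response cost described above (4 user/kernel crossings, 4 data copies) can be sketched with a local socket pair. This is a minimal sketch, not code from the lecture: `request_response`, `BUF_LEN`, and the "ping"/"pong" protocol are made-up names for illustration, and the numbered comments follow the notes' cost model rather than measuring anything.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#define BUF_LEN 16               /* illustrative buffer size */

/* Sends `request` to a forked server over a local socket pair and stores
 * the server's reply in `reply` (which must hold BUF_LEN bytes).
 * Returns 0 on success, -1 on failure. */
int request_response(const char *request, char *reply)
{
    assert(strlen(request) < BUF_LEN);

    int sv[2];                   /* sv[0]: client end, sv[1]: server end */
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) == -1)
        return -1;

    pid_t pid = fork();
    if (pid == -1) {
        close(sv[0]);
        close(sv[1]);
        return -1;
    }

    if (pid == 0) {              /* child: the server */
        char req[BUF_LEN] = {0};
        close(sv[0]);
        /* crossing 2: kernel buffer -> server (copy 2) */
        if (recv(sv[1], req, sizeof(req) - 1, 0) <= 0)
            _exit(1);
        const char *resp = strcmp(req, "ping") == 0 ? "pong" : "err";
        /* crossing 3: server -> kernel buffer (copy 3) */
        send(sv[1], resp, strlen(resp) + 1, 0);
        _exit(0);
    }

    close(sv[1]);
    memset(reply, 0, BUF_LEN);
    /* crossing 1: client -> kernel buffer (copy 1) */
    ssize_t sent = send(sv[0], request, strlen(request) + 1, 0);
    /* crossing 4: kernel buffer -> client (copy 4) */
    ssize_t got = sent > 0 ? recv(sv[0], reply, BUF_LEN - 1, 0) : -1;
    close(sv[0]);
    waitpid(pid, NULL, 0);
    return got > 0 ? 0 : -1;
}
```

Calling `request_response("ping", buf)` with a `char buf[BUF_LEN]` should leave "pong" in `buf`. Every one of the four `send`/`recv` calls is a system call, which is exactly the overhead shared memory tries to avoid.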

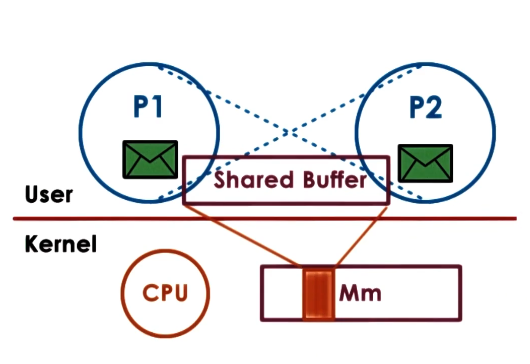

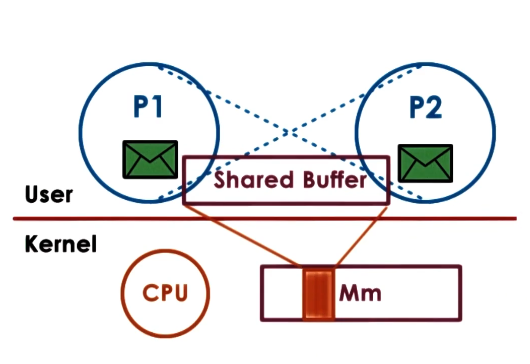

Shared Memory IPC

- Read and write to a shared memory region

- OS establishes shared channel between the processes

- Physical pages mapped into virtual address space

- The virtual address of Process 1 and the virtual address of Process 2 map to the same physical address

- However, VA(P1) does not have to equal VA(P2)

- Physical memory does not need to be contiguous

- All of this leverages existing memory management support on modern OS’s and hardware

- Pros

- System calls only for setup

- Data copies potentially reduced (but not eliminated)

- Data must be explicitly allocated from shared memory region. Must be copied if not naturally the case

- Cons

- explicit synchronization needed

- Communication protocol must be built and implemented by the developer

- Also management of the shared buffer

- APIs

- Linux supports 2: SysV and POSIX

- Also can use file-based interface such as memory mapped files

- Essentially analogous to the POSIX shared memory API

- Android uses a form called ashmem
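The idea that two different virtual addresses can be backed by the same physical pages can be seen even inside a single process. The sketch below uses the file-based interface mentioned above: it maps one temporary file twice with `MAP_SHARED` and writes through one view while reading through the other. The two views stand in for the address spaces of P1 and P2; the function name and region size are assumptions made for the example.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

#define REGION_SIZE 4096         /* illustrative region size */

/* Maps the same file-backed region at two virtual addresses and checks
 * that a write through one is visible through the other.
 * Returns 1 if the views share data, 0 if not, -1 on setup failure. */
int two_views_share_data(void)
{
    FILE *f = tmpfile();         /* unnamed temp file as the backing object */
    if (f == NULL)
        return -1;
    int fd = fileno(f);
    if (ftruncate(fd, REGION_SIZE) == -1) {
        fclose(f);
        return -1;
    }

    char *view1 = mmap(NULL, REGION_SIZE, PROT_READ | PROT_WRITE,
                       MAP_SHARED, fd, 0);
    char *view2 = mmap(NULL, REGION_SIZE, PROT_READ | PROT_WRITE,
                       MAP_SHARED, fd, 0);
    if (view1 == MAP_FAILED || view2 == MAP_FAILED) {
        if (view1 != MAP_FAILED) munmap(view1, REGION_SIZE);
        if (view2 != MAP_FAILED) munmap(view2, REGION_SIZE);
        fclose(f);
        return -1;
    }

    /* Two live mappings cannot occupy the same virtual range. */
    assert(view1 != view2);

    strcpy(view1, "hello");                      /* write via address 1 */
    int shared = strcmp(view2, "hello") == 0;    /* read via address 2 */

    munmap(view1, REGION_SIZE);
    munmap(view2, REGION_SIZE);
    fclose(f);
    return shared;
}
```

No data is copied between the two views and no system call is made for the write or the read; only the setup (`mmap`) and teardown (`munmap`) enter the kernel.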

Copy vs Map

- Contrast Shared Memory against Message Based IPC

- Goal: transfer data from address space into another target address space

- In message passing, the transfer requires that the CPU copy the data to/from port

- This takes some number of CPU cycles to do

- In the shared memory case, CPU cycles are spent mapping the shared physical memory into the address spaces of both processes

- The CPU must also copy data into channel when necessary (no user/kernel switches though)

- Worthwhile if the channel is used many times

- may perform well for single use also, if the size of the data is large enough that copying it to/from ports takes longer than mapping address spaces

- e.g. Windows local procedure calls (LPCs) exploit this difference automatically: small data is copied through a port, large data is mapped
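The trade-off above can be written as a rough inequality. All symbols are assumptions introduced for this sketch, not notation from the lecture: $n$ is the number of one-way messages of size $s$, $t_{sys}$ is the cost of one user/kernel crossing, $t_{copy}(s)$ is the cost of copying $s$ bytes, $t_{map}$ is the one-time cost of setting up the mapping, and $c \in \{0, 1\}$ says whether the sender must first copy the data into the shared region. Synchronization costs are ignored.

$$
\underbrace{t_{map} + n \, c \, t_{copy}(s)}_{\text{shared memory}}
\;<\;
\underbrace{n \left( 2\, t_{sys} + 2\, t_{copy}(s) \right)}_{\text{message passing}}
\quad\Longleftrightarrow\quad
t_{map} \;<\; n \left( 2\, t_{sys} + (2 - c)\, t_{copy}(s) \right)
$$

The right-hand side grows with both $n$ and $s$, which matches the two cases in the notes: mapping pays off when the channel is reused many times, or for a single use when the data is large.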

Shared Memory APIs

- OS supports segments of shared memory. Don’t necessarily correspond to contiguous physical pages

- Shared memory is system-wide. There are system limits on number of segments and total size

- Currently the limits are usually so big as to not be restrictive. Modern hardware is great

- CREATE - When a process requests that shared memory be created, the OS allocates the required amount of physical memory and assigns to it a unique key to identify the segment.

- All communication about this segment will use this key to refer to it.

- ATTACH - Using the key, the segment can be attached by a process. This means that the OS establishes valid mappings between the virtual addresses in the process virtual address space, and the physical memory that backs the segment.

- Multiple processes can attach to the same shared memory segment.

- Each process shares access to the same physical pages.

- Reads and writes to these pages will be visible across the processes.

- The shared memory segment can thus be mapped to different virtual addresses in different processes.

- DETACH - Detaching a process means invalidating the address mappings for the virtual address region that corresponded to that segment within the process.

- In other words the page table entries for those virtual addresses will no longer be valid

- Not the same as destroying a segment. A segment may be detached and re-attached many times during its lifespan

- DESTROY - A segment is removed only by an explicit request for destruction (or a reboot). Until then it persists, even after every process has detached or exited.

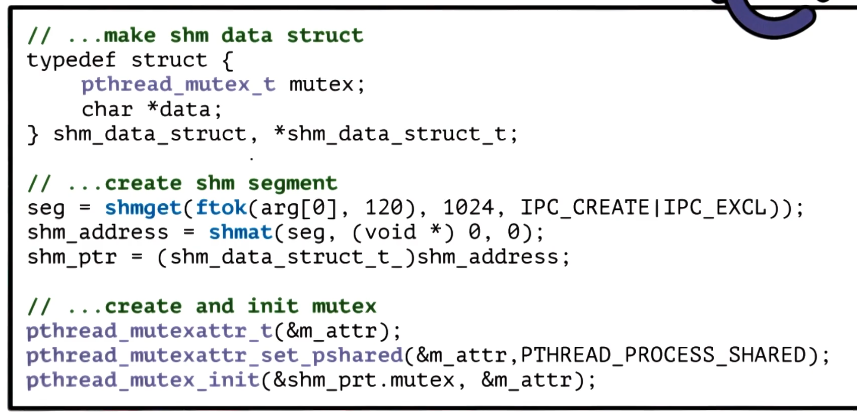
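The create / attach / detach / destroy lifecycle, and the point that detaching is not destroying, can be sketched with the SysV calls. This is a minimal single-process sketch under assumptions: `survives_detach` and `SEG_SIZE` are names made up for the example, and `IPC_PRIVATE` is used so the demo needs no key.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <string.h>
#include <sys/ipc.h>
#include <sys/shm.h>

#define SEG_SIZE 4096            /* illustrative segment size */

/* Writes `msg` into a fresh segment, detaches, re-attaches, and checks
 * that the data is still there.
 * Returns 1 if it survived, 0 if not, -1 on failure. */
int survives_detach(const char *msg)
{
    assert(strlen(msg) < SEG_SIZE);

    /* CREATE: IPC_PRIVATE asks the OS for a brand-new segment */
    int shmid = shmget(IPC_PRIVATE, SEG_SIZE, IPC_CREAT | 0600);
    if (shmid == -1)
        return -1;

    int result = -1;
    char *p = shmat(shmid, NULL, 0);             /* ATTACH */
    if (p != (void *)-1) {
        strcpy(p, msg);
        shmdt(p);                                /* DETACH: mapping gone */

        char *q = shmat(shmid, NULL, 0);         /* re-ATTACH */
        if (q != (void *)-1) {
            result = strcmp(q, msg) == 0;        /* segment kept its data */
            shmdt(q);
        }
    }

    shmctl(shmid, IPC_RMID, NULL);               /* DESTROY */
    return result;
}
```

If the final `shmctl` call were skipped, the segment would outlive the process and show up in the system-wide segment list until it is removed or the machine reboots.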

SysV API Versions

- shmget(key, size, flag)

- create or open a segment of appropriate size; returns the shmid

- ftok(pathname, proj_id)

- same args => same key (like hash function)

- generates the key that is passed to shmget()

- shmat(shmid, addr, flags)

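- addr (NULL -> OS chooses the address)

- map into user address space

- the returned address can be cast to an arbitrary type

- shmdt(addr)

- detach; takes the address returned by shmat(), not the shmid

- shmctl(shmid, cmd, buf)

- destroy with IPC_RMID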
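The calls above fit together roughly as follows. This is a sketch under assumptions: the temporary path, the project id `'G'`, and the function name `child_writes_by_key` are made up for illustration. The parent creates the segment; the child finds it again purely from the same `ftok` arguments.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <stdlib.h>
#include <string.h>
#include <sys/ipc.h>
#include <sys/shm.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#define SEG_SIZE 4096            /* illustrative segment size */

/* Returns 0 if the parent sees what the child wrote, -1 otherwise. */
int child_writes_by_key(void)
{
    char path[] = "/tmp/ipc_key_XXXXXX";     /* hypothetical key file */
    int fd = mkstemp(path);
    if (fd == -1)
        return -1;
    close(fd);

    key_t key = ftok(path, 'G');
    if (key == -1) {
        unlink(path);
        return -1;
    }
    assert(ftok(path, 'G') == key);          /* same args => same key */

    int shmid = shmget(key, SEG_SIZE, IPC_CREAT | 0600);
    if (shmid == -1) {
        unlink(path);
        return -1;
    }

    int ok = 0;
    pid_t pid = fork();
    if (pid == 0) {
        /* child: re-derive the key and open the existing segment */
        int id = shmget(ftok(path, 'G'), SEG_SIZE, 0);
        if (id == -1)
            _exit(1);
        char *p = shmat(id, NULL, 0);        /* NULL: OS picks the address */
        if (p == (void *)-1)
            _exit(1);
        strcpy(p, "written by child");
        shmdt(p);
        _exit(0);
    }
    if (pid > 0) {
        waitpid(pid, NULL, 0);
        char *p = shmat(shmid, NULL, 0);
        if (p != (void *)-1) {
            ok = strcmp(p, "written by child") == 0;
            shmdt(p);
        }
    }

    shmctl(shmid, IPC_RMID, NULL);           /* destroy the segment */
    unlink(path);
    return ok ? 0 : -1;
}
```

Using `waitpid` as the only synchronization works here because the parent reads strictly after the child has exited; two long-lived processes would need one of the synchronization mechanisms discussed later.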

POSIX API Versions

- most notable difference is that files are used in place of segments

- these files exist only in the tmpfs file system, so they are not traditional “files”

- allows OS mechanisms for files to be reused here

- e.g. key == file descriptor

- e.g. open()/close()

- shm_open()

- returns file descriptor

- in tmpfs

- mmap() and munmap()

- mapping virtual to physical addresses

- same commands as memory mapping a file. it’s just a file!

- close()

- POSIX defines no shm_close(); the descriptor from shm_open() is closed with the ordinary close(). it’s just a file!

- shm_unlink()
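A minimal sketch of the POSIX sequence follows, assuming a Linux-like system where shared memory objects live in tmpfs. The object name and the function name `posix_shm_roundtrip` are made up for the example. On older glibc versions the program may need to be linked with `-lrt`.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <fcntl.h>
#include <stdio.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#define REGION_SIZE 4096         /* illustrative region size */

/* Returns 0 if the parent sees what the child wrote, -1 otherwise. */
int posix_shm_roundtrip(void)
{
    char name[64];               /* hypothetical object name */
    int len = snprintf(name, sizeof(name), "/ipc_notes_%ld", (long)getpid());
    assert(len > 0 && (size_t)len < sizeof(name));

    int fd = shm_open(name, O_CREAT | O_EXCL | O_RDWR, 0600);
    if (fd == -1)
        return -1;
    if (ftruncate(fd, REGION_SIZE) == -1) {  /* new objects have size 0 */
        close(fd);
        shm_unlink(name);
        return -1;
    }
    char *p = mmap(NULL, REGION_SIZE, PROT_READ | PROT_WRITE,
                   MAP_SHARED, fd, 0);
    close(fd);                   /* the mapping outlives the descriptor */
    if (p == MAP_FAILED) {
        shm_unlink(name);
        return -1;
    }

    int status = -1;
    pid_t pid = fork();
    if (pid == 0) {
        /* child: open the object by name, as an unrelated process would */
        int cfd = shm_open(name, O_RDWR, 0);
        if (cfd == -1)
            _exit(1);
        char *c = mmap(NULL, REGION_SIZE, PROT_READ | PROT_WRITE,
                       MAP_SHARED, cfd, 0);
        close(cfd);
        if (c == MAP_FAILED)
            _exit(1);
        strcpy(c, "hello from child");
        munmap(c, REGION_SIZE);
        _exit(0);
    }
    if (pid > 0)
        waitpid(pid, &status, 0);

    int ok = pid > 0 && strcmp(p, "hello from child") == 0;
    munmap(p, REGION_SIZE);
    shm_unlink(name);            /* remove the name from tmpfs */
    return ok ? 0 : -1;
}
```

Everything after `shm_open` is ordinary file handling (`ftruncate`, `mmap`, `close`), which is the reuse of file mechanisms that the notes point out.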

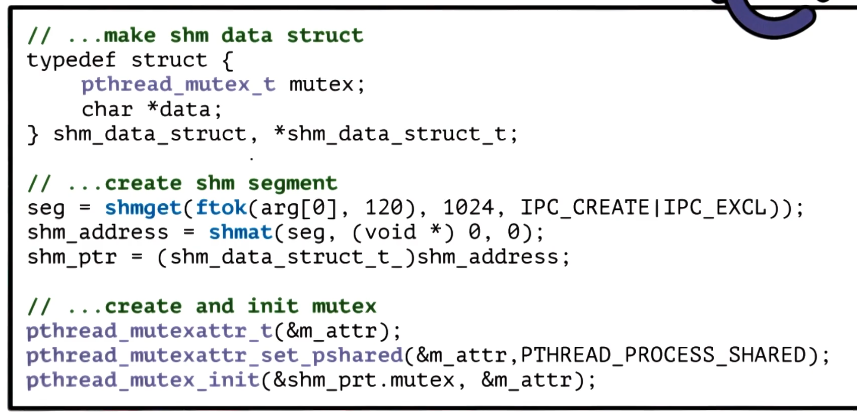

Pthread Sync for IPC

- One of the attributes used to initialize a mutex or condition variable specifies whether the object is private to a process or shared among processes

- PTHREAD_PROCESS_SHARED

- When synchronizing shared memory accesses among processes that use pthreads, we can use mutexes and condvars that have been initialized with this flag

- However, synchronization data structures must also be shared!

- Thus, allocated from shared memory region

- Once allocated, the shared mutexes and condition variables can be used as normal
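A minimal sketch of the points above: the mutex is placed inside the shared region and initialized with `PTHREAD_PROCESS_SHARED`, then a parent and a forked child both increment one counter. The struct and function names are assumptions for the example, and an anonymous shared mapping stands in for a named shared memory segment. Older toolchains may need `-pthread` when compiling.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <pthread.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

struct shared_counter {
    pthread_mutex_t lock;        /* the mutex itself lives in shared memory */
    long value;
};

/* Parent and child each add `increments` to one shared counter.
 * Returns the final value, or -1 on setup failure. */
long shared_mutex_count(int increments)
{
    assert(increments >= 0);

    struct shared_counter *sc =
        mmap(NULL, sizeof(*sc), PROT_READ | PROT_WRITE,
             MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (sc == MAP_FAILED)
        return -1;

    pthread_mutexattr_t attr;
    pthread_mutexattr_init(&attr);
    pthread_mutexattr_setpshared(&attr, PTHREAD_PROCESS_SHARED);
    pthread_mutex_init(&sc->lock, &attr);
    pthread_mutexattr_destroy(&attr);
    sc->value = 0;

    pid_t pid = fork();
    if (pid == -1) {
        munmap(sc, sizeof(*sc));
        return -1;
    }

    /* Both processes run this loop; from here on the shared mutex is
     * used exactly like a private one. */
    for (int i = 0; i < increments; i++) {
        pthread_mutex_lock(&sc->lock);
        sc->value++;
        pthread_mutex_unlock(&sc->lock);
    }

    if (pid == 0)
        _exit(0);

    waitpid(pid, NULL, 0);
    long total = sc->value;
    pthread_mutex_destroy(&sc->lock);
    munmap(sc, sizeof(*sc));
    return total;
}
```

With the lock in place, `shared_mutex_count(10000)` should return 20000. Without the process-shared attribute, or with the mutex on each process's private stack, the two processes would not actually exclude each other.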

Other IPC Sync

- shared memory accesses can be synced using OS provided mechanisms for inter-process interactions

- message queues

- implement mutual exclusion via send/recv

- example protocol

- p1 writes data to shmem, sends “ready” to queue

- p2 receives message, reads data, and sends “ok” message back

- semaphores

- binary semaphore can have 2 values, 0 or 1. functions basically same as mutex

- if value == 0 then stop/blocked

- if value == 1 then decrement(lock) and go/proceed

- ipcs == list all IPC facilities

- -m displays info on shared memory IPC only

- ipcrm == delete IPC facility

- -m [shmid] deletes shm segment with given id
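The "ready"/"ok" protocol from the message queue bullets above could look roughly like this with a SysV message queue. This is a sketch under assumptions: the message types, the `struct note` layout, and the function names are made up for the example, and an anonymous shared mapping stands in for the shared memory segment.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <string.h>
#include <sys/ipc.h>
#include <sys/mman.h>
#include <sys/msg.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#define REGION_SIZE 4096
enum { NOTE_READY = 1, NOTE_OK = 2 };    /* hypothetical message types */

struct note {
    long mtype;                  /* required first field for msgsnd/msgrcv */
    char text[8];
};

static int send_note(int q, long type, const char *text)
{
    struct note n = { .mtype = type };
    assert(strlen(text) < sizeof(n.text));
    strcpy(n.text, text);
    return msgsnd(q, &n, sizeof(n.text), 0);
}

/* P1 (parent) writes to shared memory and sends "ready"; P2 (child)
 * waits for it, reads the data, and answers "ok".
 * Returns 0 if P1 received "ok", -1 otherwise. */
int ready_ok_protocol(void)
{
    char *shm = mmap(NULL, REGION_SIZE, PROT_READ | PROT_WRITE,
                     MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (shm == MAP_FAILED)
        return -1;
    int q = msgget(IPC_PRIVATE, IPC_CREAT | 0600);
    if (q == -1) {
        munmap(shm, REGION_SIZE);
        return -1;
    }

    int ok = 0;
    pid_t pid = fork();
    if (pid == 0) {                              /* P2 */
        struct note n;
        /* blocks until P1 announces that the data is in place */
        if (msgrcv(q, &n, sizeof(n.text), NOTE_READY, 0) == -1)
            _exit(1);
        int good = strcmp(shm, "payload") == 0;  /* read shared data */
        send_note(q, NOTE_OK, good ? "ok" : "bad");
        _exit(0);
    }
    if (pid > 0) {                               /* P1 */
        strcpy(shm, "payload");                  /* write shared data */
        send_note(q, NOTE_READY, "ready");
        struct note reply;
        ssize_t r = msgrcv(q, &reply, sizeof(reply.text), NOTE_OK, 0);
        ok = r != -1 && strcmp(reply.text, "ok") == 0;
        waitpid(pid, NULL, 0);
    }

    msgctl(q, IPC_RMID, NULL);
    munmap(shm, REGION_SIZE);
    return ok ? 0 : -1;
}
```

The bulk data never travels through the queue; the messages only carry the hand-off, so the blocking `msgrcv` calls provide the ordering that shared memory alone does not.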

Shared Memory Design Considerations

- Different APIs/mechanisms for synchronization

- OS only provides shared memory. gets out of the way after

- All of the data passing and synchronization protocols are up to the programmer

- How many segments to use?

- 1 large segment

- manager for allocating/freeing mem from shared segment

- many small segments (one for each pairwise communication)

- use pool of segments, queue of segment ids

- pre-create to avoid overhead

- use queue to allow processes to pick off which one they’ll use

- communicate segment IDs among processes

- with a pool of segments, P1 talking to P2 doesn’t necessarily know which segment to look at. need to send message to tell each other.

- What size segments?

- If size of data is known up front and doesn’t change, can just set segment size == data size and call it a day

- This does limit max data size, as segment size is limited by OS

- Segment size < data size

- Transfer data in rounds

- include protocol to track progress

- Probably cast shmem as data structure

- actual data buffer

- sync construct

- flags to track progress

- What if data doesn’t fit?
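When the data is larger than the segment, the "transfer in rounds" idea can be sketched by casting the shared region to a struct that holds the buffer, the sync constructs, and progress fields. Everything named here (`struct channel`, `CHUNK`, `chunked_transfer`, the field names) is an assumption for illustration, and an anonymous shared mapping stands in for the segment.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <pthread.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#define CHUNK 64                 /* segment payload, smaller than the data */

struct channel {                 /* layout cast onto the shared segment */
    pthread_mutex_t lock;        /* sync constructs */
    pthread_cond_t changed;
    int full;                    /* flag: buf holds an unread chunk */
    size_t total;                /* progress: bytes in the whole transfer */
    size_t len;                  /* valid bytes in buf this round */
    char buf[CHUNK];             /* actual data buffer */
};

static struct channel *channel_create(size_t total)
{
    struct channel *ch = mmap(NULL, sizeof(*ch), PROT_READ | PROT_WRITE,
                              MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (ch == MAP_FAILED)
        return NULL;
    pthread_mutexattr_t ma;
    pthread_condattr_t ca;
    pthread_mutexattr_init(&ma);
    pthread_mutexattr_setpshared(&ma, PTHREAD_PROCESS_SHARED);
    pthread_mutex_init(&ch->lock, &ma);
    pthread_mutexattr_destroy(&ma);
    pthread_condattr_init(&ca);
    pthread_condattr_setpshared(&ca, PTHREAD_PROCESS_SHARED);
    pthread_cond_init(&ch->changed, &ca);
    pthread_condattr_destroy(&ca);
    ch->full = 0;
    ch->total = total;
    return ch;
}

static void send_all(struct channel *ch, const char *data)
{
    for (size_t off = 0; off < ch->total; ) {
        size_t n = ch->total - off < CHUNK ? ch->total - off : CHUNK;
        pthread_mutex_lock(&ch->lock);
        while (ch->full)         /* wait until the receiver drained buf */
            pthread_cond_wait(&ch->changed, &ch->lock);
        memcpy(ch->buf, data + off, n);
        ch->len = n;
        ch->full = 1;
        pthread_cond_signal(&ch->changed);
        pthread_mutex_unlock(&ch->lock);
        off += n;
    }
}

/* `out` must have room for ch->total bytes. */
static size_t recv_all(struct channel *ch, char *out)
{
    size_t got = 0;
    while (got < ch->total) {
        pthread_mutex_lock(&ch->lock);
        while (!ch->full)        /* wait for the next round */
            pthread_cond_wait(&ch->changed, &ch->lock);
        assert(ch->len <= CHUNK);
        memcpy(out + got, ch->buf, ch->len);
        got += ch->len;
        ch->full = 0;
        pthread_cond_signal(&ch->changed);
        pthread_mutex_unlock(&ch->lock);
    }
    return got;
}

/* Sends 1000 bytes through a 64-byte buffer; returns 0 if the child
 * reassembled them correctly, -1 otherwise. */
int chunked_transfer(void)
{
    enum { TOTAL = 1000 };
    char data[TOTAL];
    for (int i = 0; i < TOTAL; i++)
        data[i] = (char)('a' + i % 26);

    struct channel *ch = channel_create(TOTAL);
    if (ch == NULL)
        return -1;
    pid_t pid = fork();
    if (pid == -1) {
        munmap(ch, sizeof(*ch));
        return -1;
    }
    if (pid == 0) {              /* child: receiver */
        char out[TOTAL];
        size_t got = recv_all(ch, out);
        _exit(got == TOTAL && memcmp(out, data, TOTAL) == 0 ? 0 : 1);
    }
    send_all(ch, data);          /* parent: sender */
    int status = 0;
    waitpid(pid, &status, 0);
    munmap(ch, sizeof(*ch));
    return WIFEXITED(status) && WEXITSTATUS(status) == 0 ? 0 : -1;
}
```

A single condition variable is enough here because there is exactly one sender and one receiver, and they never wait at the same time. More participants would likely call for separate "not full" and "not empty" conditions.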